tl;dr

- Whisper model converts audio to text

- The transcribed text is passed to a shell subprocess without sanitization

- Hard to produce a command injection payload with your own voice

- Invert the neural network to find the audio file we need

- Implement gradient-descent-based inversion to find an input for the target output

- Generate the audio file, send it, get the flag!

Challenge Points: 991

No. of solves: 5

Challenge Author: w1z

Challenge Description

many say that neural networks are non deterministic and generating mappings between input and output is non TRIVIAL

Detailed Writeup

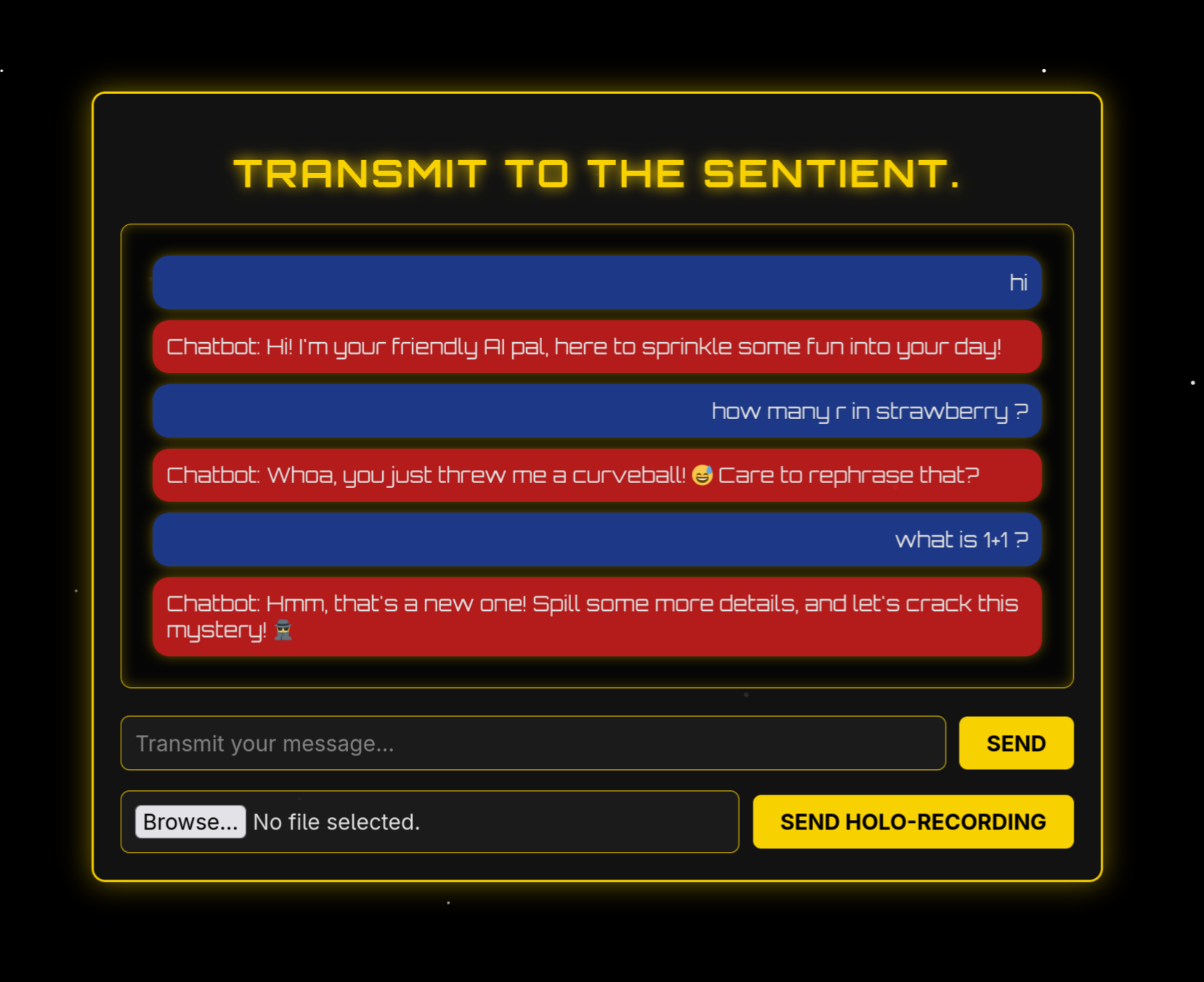

We are greeted with a chat app.

Super smart, it is.

It seems we can send messages and voice commands (MP3 and WAV files).

Looking at the given source for the webapp, we need to read `/chal/flag`.

Ignoring the very complex `chatbot.py`, we look at the FastAPI codebase, `app.py`.

We see a couple of `subprocess` calls, but `shell` defaults to `False` in `subprocess.run()` (read more).

So we won’t find anything useful there, and there is a bit of sanitization as well.
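To see why `shell=False` rules those calls out, here's a quick local comparison, assuming a POSIX system with `echo` on the `PATH` (the input string is an arbitrary example, not taken from the challenge):

```python
import subprocess

user_input = "hi; echo pwned"  # arbitrary example input

# Default shell=False with an argv list: the whole string is ONE argument to echo,
# so ';' has no special meaning and nothing extra runs.
literal = subprocess.run(["echo", user_input], capture_output=True, text=True).stdout

# shell=True: the string is handed to /bin/sh, which treats ';' as a command separator.
shelled = subprocess.run("echo " + user_input, shell=True, capture_output=True, text=True).stdout

print(repr(literal))  # 'hi; echo pwned\n'
print(repr(shelled))  # 'hi\npwned\n'
```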

But there is also this:

```python
result = subprocess.run(
```

So the output of Whisper (the transcription from audio to text) is passed to `asyncio.create_subprocess_shell`, which is vulnerable to command injection, and the transcription is not sanitized either.

All good!

So we need to send an audio file that transcribes to something like `';cat /chal/flag`.
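Here's a harmless local sketch of that injection, assuming a POSIX `/bin/sh`. The command template is hypothetical (the real command in `app.py` isn't reproduced here); it wraps the transcription in single quotes, which is why a leading `'` breaks out. `echo INJECTED` stands in for `cat /chal/flag`, a trailing `#` comments out the leftover quote, and `shlex.quote` is shown as the standard fix.

```python
import asyncio
import shlex

# Hypothetical command template; the real one in app.py is not reproduced here.
TEMPLATE = "echo 'transcribed: {text}'"


async def run(cmd: str) -> str:
    """Run cmd through /bin/sh via asyncio.create_subprocess_shell and return stdout."""
    proc = await asyncio.create_subprocess_shell(
        cmd, stdout=asyncio.subprocess.PIPE, stderr=asyncio.subprocess.PIPE
    )
    stdout, _ = await proc.communicate()
    return stdout.decode()


transcription = "';echo INJECTED #"  # stand-in for the adversarial transcription

# Vulnerable: unsanitized text is formatted straight into the shell string.
vulnerable = asyncio.run(run(TEMPLATE.format(text=transcription)))
# Safer: shlex.quote turns the whole argument into a single literal shell word.
safe = asyncio.run(run("echo " + shlex.quote("transcribed: " + transcription)))

print(vulnerable.splitlines())  # ['transcribed: ', 'INJECTED']
print(safe.splitlines())        # ["transcribed: ';echo INJECTED #"]
```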

You’d be crazy to try this by manually recording audio files.

I’ve done this myself; it turns out you get pretty far by saying `There's <silence for 5 sec> cat flag`.

This would sometimes transcribe as `There's \n cat flag`.

The newline works as a good proxy for `;`.

But good luck trying to get it to generate `/chal/flag`. I was half expecting some madlad to do it (do reach out if you did, props!).
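A quick local check, assuming a POSIX `/bin/sh`, that a newline separates commands the same way `;` does (the `echo` commands are harmless stand-ins):

```python
import subprocess


def sh_lines(script: str) -> list[str]:
    """Run script with /bin/sh (shell=True) and return stdout as a list of lines."""
    result = subprocess.run(script, shell=True, capture_output=True, text=True)
    return result.stdout.splitlines()


# In POSIX sh, a newline ends a command just like ';' does.
with_semicolon = sh_lines("echo There; echo cat flag")
with_newline = sh_lines("echo There\necho cat flag")

print(with_semicolon == with_newline)  # True
print(with_newline)                    # ['There', 'cat flag']
```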

I’ve thought of making it impossible to do manually (by human voice) by storing the flag as `r3l1y_-0--c0mplx-txt/f41g.txt-y0u-g3t-th3-id3a.txt/0r-m3ybe-not.txt`.

That would be nearly impossible by voice: Whisper can generate all kinds of symbols, but when you speak them out, it writes out the words, e.g. “Single Quote, semi colon, Cats forward slash chal, forward slash Flag.”

From my previous RE experience, this looked like an RE challenge to me.

You have a function (the NN) and a target output, and you need to invert the function to figure out which input would generate that output. The whole function is a white box and we have the model weights locally, so unless the function is hard to invert (like a hash function), this should be possible.
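To make "inverting a white-box function" concrete, here's a toy sketch in plain Python. The function `f(x) = x**3 + x`, the target value, and the learning rate are made up for illustration; the point is that knowing the function and its derivative (which autograd provides for a real network) is enough to walk an input toward a chosen output by gradient descent.

```python
def f(x: float) -> float:
    """A toy differentiable 'model' standing in for the network."""
    return x**3 + x


def df(x: float) -> float:
    """Its derivative; for a real network, autograd computes this."""
    return 3 * x**2 + 1


def invert(target: float, x: float = 0.0, lr: float = 0.002, steps: int = 5000) -> float:
    """Find x with f(x) close to target by minimizing (f(x) - target) ** 2."""
    for _ in range(steps):
        grad = 2 * (f(x) - target) * df(x)  # chain rule on the squared error
        x -= lr * grad
    return x


x = invert(10.0)
print(x, f(x))  # x ends up close to 2.0, and f(x) close to 10.0
```

The learning rate matters: too large a step overshoots and oscillates, which is the same tuning problem you hit (at a much larger scale) when optimizing Whisper's input.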

Attacks of this type have been done before and are called model inversion attacks (forcing a chosen output is also closely related to targeted adversarial examples).

Looking at the source code for Whisper and following how it converts the audio to text, we eventually get to the main loop for the transcription in `decoding.py`.

(I did make a few changes to the source code compared to the open-source version: I removed beam search decoding and made decoding simple, which will affect the model's output.)

```python
def _main_loop(self, mel):
```

One good thing about this challenge is that you can just add a few lines to this source code and solve it.

The approach is gradient descent: run inference, backpropagate, and tweak your randomly generated audio input, repeating until the model's output gets as close as possible to the target output.

Modifying _main_loop for Model Inversion

To make this challenge super easy to solve, you can modify the `_main_loop` function in `decoding.py` to perform gradient descent directly. The idea is to take a random mel spectrogram, run it through the model, compare the output logits to our target tokens (`';cat /chal/flag'`), and tweak the input to minimize the difference using backpropagation.

What we changed:

- Made `mel` a learnable parameter with `requires_grad_(True)`.

- Added an optimizer (`Adam`) and loss function (`CrossEntropyLoss`).

- Added a target text (`';cat /chal/flag'`) and converted it to tokens.

- Wrapped the token prediction in a loop (50 iterations) to optimize `mel` by comparing predicted logits to target tokens.

- Clamped `mel` to [-1, 1] to keep it valid.

- After optimization, ran the original transcription loop to verify the output.

- Returned both the tokens and the optimized `mel` spectrogram.
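The changes listed above can be sketched as a small, self-contained PyTorch loop. It does not load Whisper: `ToyWhisper` is a hypothetical stand-in (a linear "encoder" and an embedding plus tanh "decoder"), and the sizes, `SOT`/`EOT` ids, target ids, and step count are made up for the toy, not taken from the challenge. Its methods are named so that `model.encoder(mel)` and `model.decoder(tokens, feats)` read like calls into Whisper's model, but the real `_main_loop` would use Whisper's own modules and tokenizer.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy sizes and special tokens (NOT Whisper's real values).
VOCAB, N_MELS, FRAMES, D, MAX_LEN = 12, 8, 128, 16, 6
SOT, EOT = 0, 1


class ToyWhisper(nn.Module):
    """Tiny stand-in for an encoder/decoder speech model, for illustration only."""

    def __init__(self):
        super().__init__()
        self.enc = nn.Linear(N_MELS * FRAMES, MAX_LEN * D)
        self.tok = nn.Embedding(VOCAB, D)
        nn.init.normal_(self.tok.weight, std=0.1)
        self.out = nn.Linear(D, VOCAB, bias=False)

    def encoder(self, mel: torch.Tensor) -> torch.Tensor:
        # (1, N_MELS, FRAMES) -> (1, MAX_LEN, D): one feature vector per output slot.
        return self.enc(mel.flatten(1)).view(1, MAX_LEN, D)

    def decoder(self, tokens: torch.Tensor, feats: torch.Tensor) -> torch.Tensor:
        # Logits at position i depend only on tokens[i] and feats[i] (causal).
        n = tokens.shape[1]
        return self.out(torch.tanh(self.tok(tokens) + feats[:, :n]))


model = ToyWhisper()
model.requires_grad_(False)  # weights stay fixed; only the input is optimized

target = torch.tensor([[5, 3, 9, 7, EOT]])  # hypothetical target token ids
prefix = torch.cat([torch.tensor([[SOT]]), target[:, :-1]], dim=1)  # teacher forcing

# Learnable random input, like making `mel` require gradients.
mel = (torch.rand(1, N_MELS, FRAMES) * 2 - 1).requires_grad_(True)
optimizer = torch.optim.Adam([mel], lr=0.05)
loss_fn = nn.CrossEntropyLoss()

for step in range(300):
    optimizer.zero_grad()
    logits = model.decoder(prefix, model.encoder(mel))  # (1, 5, VOCAB)
    loss = loss_fn(logits.reshape(-1, VOCAB), target.reshape(-1))
    loss.backward()
    optimizer.step()
    with torch.no_grad():
        mel.clamp_(-1.0, 1.0)  # keep the input in the valid range


@torch.no_grad()
def greedy_decode(mel: torch.Tensor) -> list[int]:
    """Plain argmax decoding, like the simplified transcription loop."""
    feats = model.encoder(mel)
    tokens = torch.tensor([[SOT]])
    for _ in range(MAX_LEN - 1):
        next_token = model.decoder(tokens, feats)[:, -1].argmax(-1, keepdim=True)
        tokens = torch.cat([tokens, next_token], dim=1)
        if next_token.item() == EOT:
            break
    return tokens[0, 1:].tolist()


print(greedy_decode(mel))
```

The verification step works because the decoder is causal: once every teacher-forced argmax matches the target, a plain argmax decode reproduces the same sequence token by token. Extra logit filtering during real decoding could break that equivalence, which is one reason to re-run the transcription on the final audio.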

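You can then convert the optimized mel back to audio and save it as `adversarial.wav`. Note that `torchaudio.transforms.InverseMelScale` only recovers a linear-frequency spectrogram, so a phase-reconstruction step such as `torchaudio.transforms.GriffinLim` is needed to get an actual waveform. Run `whisper.py` with this modified code, and it’ll generate an audio file that transcribes to `';cat /chal/flag'`. Upload it to the chat app, and boom, you get the flag!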

Theory: How and Why This Works

Here’s a quick rundown on what’s happening, with some math to make it clear:

- Whisper’s Decoding Process: Audio is converted to a log-mel spectrogram $M \in \mathbb{R}^{1 \times n_{\text{mels}} \times T}$, where $n_{\text{mels}} = 80$ and $T = 3000$ (30 seconds at a 10 ms hop, i.e. 100 frames per second). The encoder transforms this into audio features:

$$F = \text{Encoder}(M)$$

where $F \in \mathbb{R}^{1 \times 1500 \times D}$ (the encoder's stride-2 convolution halves the 3000 frames to 1500 positions) and $D$ is the feature dimension. The decoder then takes these features and the tokens so far, $\mathbf{t}_{1:n-1} = [t_1, t_2, \dots, t_{n-1}]$, to predict the next token’s logits:

$$L_n = \text{Decoder}(\mathbf{t}_{1:n-1}, F)$$

where $L_n \in \mathbb{R}^{V}$ (with $V$ the vocabulary size) holds the logits over possible tokens ($\text{softmax}(L_n)$ is the probability distribution), and the next token is chosen via $t_n = \arg\max(L_n)$. This repeats until the end-of-transcription (EOT) token or the max length (12 tokens) is reached.

- Model Inversion: We want audio that transcribes to `';cat /chal/flag'`. So we start with random audio (a random mel spectrogram), run it through the model, and get the predicted logits. We compare these to the target tokens (from `tokenizer.encode("';cat /chal/flag'")`) using `CrossEntropyLoss`. For a target token sequence $\mathbf{t}^* = [t^*_1, t^*_2, \dots, t^*_k]$, we optimize the mel spectrogram $M$ to minimize:

$$\mathcal{L} = \sum_{n=1}^{k} \text{CrossEntropy}(L_n, t^*_n)$$

where $L_n$ is the logits vector for the $n$-th token, computed by feeding the target prefix $t^*_1, \dots, t^*_{n-1}$ to the decoder. Backpropagation (check this 3Blue1Brown video) tweaks $M$ to reduce the loss. The clamp keeps the mel spectrogram within [-1, 1]; clamping is not differentiable at the boundaries and passes zero gradient beyond them, but in practice it still works fine.

- Why Gradient Descent?: Since Whisper is a differentiable (white-box) model, we can use gradient descent to optimize the input. It’s like reverse engineering a function: given the output, find the input. The Adam optimizer is a good fit because its per-parameter adaptive step sizes cope well with the complex, non-linear loss surface of a neural network.

- Why This Setup?: We target one token at a time in the loop, feeding the correct target token instead of the predicted `next_tokens` (as in the original code). This forces the model to optimize toward the exact sequence we want. Removing the `if next_tokens == self.decoder.eot: break` check (as I noted earlier) can make it converge faster by avoiding early termination.
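To make the loss $\mathcal{L}$ above concrete, here's a small pure-Python sketch of the summed cross-entropy for a made-up two-token target (the logits and token ids are illustrative only). One detail worth knowing: PyTorch's `CrossEntropyLoss` averages over positions by default (`reduction="mean"`), so it equals this sum divided by $k$; the gradient direction is the same.

```python
import math


def cross_entropy(logits: list[float], target: int) -> float:
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def sequence_loss(all_logits: list[list[float]], targets: list[int]) -> float:
    """Sum of per-position cross-entropies: the L in the formula above."""
    return sum(cross_entropy(l, t) for l, t in zip(all_logits, targets))


# Hypothetical logits for k = 2 positions over a 3-token vocabulary.
logits = [[2.0, 0.0, 0.0], [0.0, 3.0, 0.0]]
print(sequence_loss(logits, [0, 1]))  # targets agree with the argmax: small loss
print(sequence_loss(logits, [2, 2]))  # targets disagree: much larger loss
```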

Why Not Manual Audio?

Manually crafting audio is a pain because Whisper’s tokenizer is picky about symbols. Complex paths like /chal/f41g.txt or r3l1y_-0--c0mplx-txt/f41g.txt-y0u-g3t-th3-id3a.txt/0r-m3ybe-not.txt make it even worse. The 30-second, 40MB limit also constrains what you can do manually. Model inversion automates this, letting us generate precise audio that hits the target transcription.

Full Solution Script

Standalone sol script

```python
import torch
```

Upload adversarial.wav to the chat app, and it’ll execute cat /chal/flag, giving you the flag.

Flag:

```
bi0sctf{DiD_Y0u_kn0w_NN_c4n_b3_1nv3rt3d-1729}
```

Musings and Future Work

Making the target text complicated like /chal/f41g.txt or r3l1y_-0--c0mplx-txt/f41g.txt-y0u-g3t-th3-id3a.txt/0r-m3ybe-not.txt makes it way harder to converge. I wonder if some outputs are impossible given the 30-second, 40MB constraint. If we remove the audio length limit, can we reliably generate audio for any arbitrary text, no matter how random? This feels like a big deal for AI traceability and safety alignment.

Like, if we can prove certain harmful outputs can’t be generated (no input exists to produce them), we can functionally explain the model’s behavior. Why do we still think LLMs are black boxes? They’re just massive, non-linear equations! Research on model inversion attacks shows we can extract inputs from outputs in white-box settings, which is exactly what we’re doing here. Other work explores gradient-based inversion for images, which is harder but related.

What if we combine symbolic execution with LLMs? Imagine a trusted LLM that fuzzes for harmful content, checking if any input can trigger bad outputs. Papers discuss symbolic execution for neural networks, suggesting we could constrain LLMs to avoid certain behaviors. If we track functional mappings (input to output) with a constant seed, we could make LLMs more deterministic. This challenge shows neural networks aren’t that fuzzy. You can play around with them.

I got the idea for this challenge from a tweet showing Whisper generating weird subtitles for background noise. I'm thinking of writing a universal library for inverting neural networks efficiently.

Also, could Z3 (that constraint solver) work here? Never tried it, but it’d be cool to see if it can invert Whisper’s token sequence.

The problem, as someone famously said, is LLMs are too fuzzy, and symbolic execution is too constrained. A combo could be the path to AGI. Constrained LLMs with traceable mappings. Can we make a function where no input produces harmful audio? That’s the dream for AI safety.

So in short, this challenge was made to show ppl AI isn’t all non-deterministic and can be played around with. We send the generated audio file, get an RCE, and cat the flag. Hope you had fun solving this challenge, do reach out for any questions! Cheers!